BulletArm Baselines

This subpackage implements a collection of state-of-the-art baseline algorithms to benchmark new methods against. The algorithms provided cover a wide range of state and action spaces for either open-loop control or close-loop control. Additionally, we provide a number of logging and plotting utilities for ease of use.

Open-Loop Benchmarks

Prerequisite

Install PyTorch (Recommended: pytorch==1.7.0, torchvision==0.8.1)

(Optional, required for 6D benchmark) Install CuPy

Install other required packages

pip install -r baseline_requirements.txt

Goto the baseline directory

cd helping_hands_rl_envs/helping_hands_rl_baselines/fc_dqn/scripts

Open-Loop 2D Benchmarks

python main.py --algorithm=[algorithm] --architecture=[architecture] --action_sequence=xyp --random_orientation=f --env=[env]

Select

[algorithm]from:sdqfd(recommended),dqfd,adet,dqnSelect

[architecture]from:equi_fcn(recommended),cnn_fcnAdd

--fill_buffer_deconstructto use deconstruction planner for gathering expert data.

Open-Loop 3D Benchmarks

python main.py --algorithm=[algorithm] --architecture=[architecture] --env=[env]

Select

[algorithm]from:sdqfd(recommended),dqfd,adet,dqnSelect

[architecture]from:equi_asr(recommended),cnn_asr,equi_fcn,cnn_fcn,rot_fcnAdd

--fill_buffer_deconstructto use deconstruction planner for gathering expert data.

Open-Loop 6D Benchmarks

python main.py --algorithm=[algorithm] --architecture=[architecture] --action_sequence=xyzrrrp --patch_size=[patch_size] --env=[env]

Select

[algorithm]from:sdqfd(recommended),dqfd,adet,dqnSelect

[architecture]from:equi_deictic_asr(recommended),cnn_asrSet

[patch_size]to be40(recommended, required for bumpy_box_palletizing environment) or24Add

--fill_buffer_deconstructto use deconstruction planner for gathering expert data.

Additional Training Arguments

Close-Loop Benchmarks

Prerequisite

Install PyTorch (Recommended: pytorch==1.7.0, torchvision==0.8.1)

Install other required packages

pip install -r baseline_requirements.txt

Goto the baseline directory

cd helping_hands_rl_envs/helping_hands_rl_baselines/equi_rl/scripts

Close-Loop 3D Benchmarks

python main.py --algorithm=[algorithm] --action_sequence=pxyz --random_orientation=f --env=[env]

Select

[algorithm]from:sac,sacfd,equi_sac,equi_sacfd,ferm_sac,ferm_sacfd,rad_sac,rad_sacfd,drq_sac,drq_sacfd

Close-Loop 4D Benchmarks

python main.py --algorithm=[algorithm] --env=[env]

Select [algorithm] from:

sac,sacfd,equi_sac,equi_sacfd,ferm_sac,ferm_sacfd,rad_sac,rad_sacfd,drq_sac,drq_sacfdTo use PER and data augmentation buffer, add

--buffer=per_expert_aug

Additional Training Arguments

Logging & Plotting Utilities

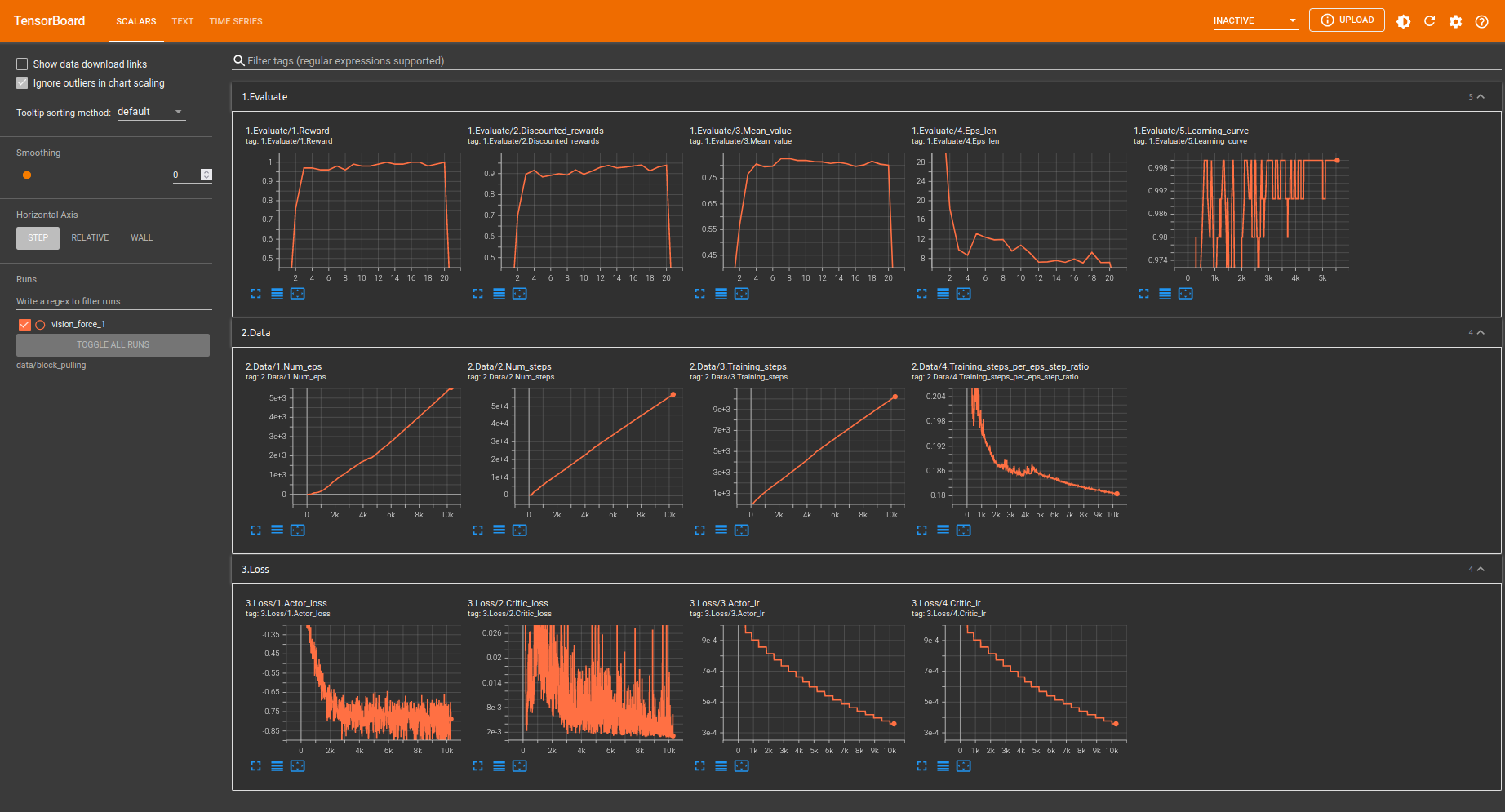

To assist with debugging new algorithms, we include the logging and plotting tools which we use for the baselines. The logger wraps Tensorboard

and provides numerous functions to log various details of the training process. An example of the information the logger displays can be seen below.

In addition to the default data, any additional data can be added as desired using the updateScalars() function. The plotter is currently in

its infancy but provides a easy way to plot and compare different algorithms when using the provided logger.

An example run showing the default information captured by the logger.

Logger

- class Logger(results_path, checkpoint_interval=500, num_eval_eps=100, hyperparameters=None)[source]

Logger class. Writes log data to tensorboard.

- Parameters

results_path (str) – Path to save log files to

num_eval_eps (int) – Number of episodes in a evaluation iteration

hyperparameters (dict) – Hyperparameters to log. Defaults to None

- exportData()[source]

Export log data as a pickle

- Parameters

filepath (str) – The filepath to save the exported data to

- getAvg(l, n=0)[source]

Numpy mean wrapper to handle empty lists.

- Parameters

l (list[float]) – The list

n (int) – Number of trail elements to average over. Defaults to entire list.

- Returns

List average

- Return type

float

- getCurrentLoss(n=100)[source]

Calculate the average loss of previous n steps :param n: the number of previous training steps to calculate the average loss

- Returns

the average loss value

- getScalars(keys)[source]

Get data from the scalar log dict.

- Parameters

keys (str | list[str]) – Key or list of keys to get from the scalar log dict

- Returns

- Single object when single key is passed or dict containing objects from

all keys

- Return type

Object | Dict

- loadCheckPoint(checkpoint_dir, agent_load_func, buffer_load_func)[source]

Load the checkpoint

- Parameters

checkpoint_dir – the directory of the checkpoint to load

agent_load_func (func) – the agent’s loading checkpoint function. agent_load_func must take a dict as input to load the agent’s checkpoint

buffer_load_func (func) – the buffer’s loading checkpoint function. buffer_load_func must take a dict as input to load the buffer’s checkpoint

- logEvalEpisode(rewards, values=None, discounted_return=None)[source]

Log a evaluation episode.

- Parameters

(list[float] (rewards) – Rewards for the episode

values (list[float]) – Values for the episode

discounted_return (list[float]) – Discounted return of the episode

- logStep(rewards, done_masks)[source]

Log episode step.

- Parameters

rewards (list[float]) – List of rewards

done_masks (list[int]) –

- logTrainingEpisode(rewards)[source]

Log a episode.

- Parameters

(list[float] (rewards) – Rewards for the entire episode

- saveCheckPoint(agent_save_state, buffer_save_state)[source]

Save the checkpoint

- Parameters

agent_save_state (dict) – the agent’s save state for checkpointing

buffer_save_state (dict) – the buffer’s save state for checkpointing

- saveParameters(parameters)[source]

Save the parameters as a json file

- Parameters

parameters – parameter dict to save